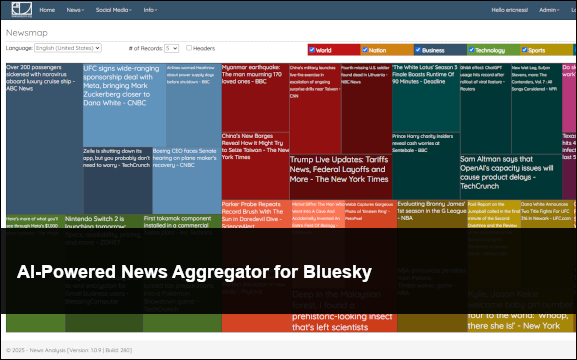

AI-Powered News Aggregator for Bluesky

April 2nd, 2025 | Published in Personal

Introduction

I recently published the code that runs my news analysis Bluesky account, in part to provide as much transparency as possible about the news items it posts.

Technical Overview

The system is a Python application that ties several libraries and external services together into a single news processing pipeline. Here’s a high-level overview of how it works:

1. News Collection: The system starts by gathering potential news articles from various sources

2. AI Evaluation: Google’s Gemini AI evaluates and selects the most newsworthy content

3. Content Processing: The selected article is fetched and analyzed for paywalls and duplicates

4. Summary Generation: Gemini AI creates a balanced, factual summary

5. Social Media Posting: The summary is posted to Bluesky with proper formatting and hashtags

The key technologies powering this system include:

– Python: The core programming language tying everything together

– Google’s Gemini AI: For intelligent article selection and summary generation

– AT Protocol Client (atproto): For posting to Bluesky

– Newspaper3k: For web scraping and article parsing

– Selenium: For browser automation when needed to resolve URLs

– NLTK: For natural language processing tasks

– Pandas: For data handling and manipulation

The system follows a linear flow, with each component handling a specific part of the process:

News Sources → Selection AI → Content Fetcher → Paywall Detection → Similarity Checker → Summary Generator → Bluesky Poster

Each component is designed to be modular, allowing for easier maintenance and future enhancements.
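The linear, modular flow above can be pictured as a list of stage functions, where each stage receives the previous stage's output and can abort the run by raising an exception. This is a hypothetical sketch: the stage names, the `SkipArticle` exception, and the dictionary passed between stages are illustrative and are not taken from the repository.

```python
from typing import Callable, Dict, List, Optional


class SkipArticle(Exception):
    """Raised by a stage to stop processing the current article (hypothetical)."""


Stage = Callable[[Dict], Dict]


def run_pipeline(stages: List[Stage], context: Dict) -> Optional[Dict]:
    """Run each stage in order; return the final context, or None if a stage skipped."""
    for stage in stages:
        try:
            context = stage(context)
        except SkipArticle as reason:
            print(f"{stage.__name__} skipped the article: {reason}")
            return None
    return context


# Hypothetical stand-ins for the real components
def select_article(ctx: Dict) -> Dict:
    return {**ctx, "url": ctx["candidates"][0]}


def check_paywall(ctx: Dict) -> Dict:
    if "wsj.com" in ctx["url"]:  # toy check; see the paywall section for a stricter matcher
        raise SkipArticle("known paywall domain")
    return ctx


def write_summary(ctx: Dict) -> Dict:
    return {**ctx, "post": f"Summary of {ctx['url']}"}


if __name__ == "__main__":
    result = run_pipeline([select_article, check_paywall, write_summary],
                          {"candidates": ["https://example.com/story"]})
    print(result["post"])
```

Keeping each stage a plain function makes it easy to test or replace one component without touching the others.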

Key Features Deep Dive

AI-Powered Article Selection

At the heart of the system is the AI-powered article selection process. Rather than using simple metrics like view counts or recency, I leverage Google’s Gemini AI to evaluate the newsworthiness of potential articles.

The selection process begins by providing the AI with a list of candidate articles and recent posts. Here’s the prompt I use:

prompt = f"""Select the single most newsworthy article that:
1. Has significant public interest or impact
2. Represents meaningful developments rather than speculation
3. Avoids sensationalism and clickbait

Recent posts:
{recent_titles}

Candidates:
{candidate_titles}

Return ONLY the URL and Title in this format:
URL: [selected URL]
TITLE: [selected title]"""

This approach has several advantages:

1. Contextual understanding: The AI judges what each headline is actually about, not just which keywords it contains

2. Balance against recent posts: By including recently posted content, the AI avoids selecting similar stories

3. Quality prioritization: The specific criteria help filter out clickbait and promotional content

To improve diversity, I also shuffle the candidate list before evaluation, which helps keep the system from getting stuck in content loops or echo chambers.
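The shuffle and the fixed `URL:` / `TITLE:` reply format suggest two small helpers around the model call. The sketch below is an assumption about how they might look: the function names, the one-line candidate format, and the use of `random.Random` with an optional seed are illustrative, not the repository's code.

```python
import random
import re
from typing import List, Optional, Tuple


def format_candidates(candidates: List[Tuple[str, str]], seed: Optional[int] = None) -> str:
    """Shuffle (url, title) pairs and render them one per line for the prompt."""
    shuffled = candidates[:]  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    return "\n".join(f"URL: {url} | TITLE: {title}" for url, title in shuffled)


def parse_selection(response_text: str) -> Optional[Tuple[str, str]]:
    """Extract the URL and TITLE lines from the model's reply, or None if either is missing."""
    url_match = re.search(r"^\s*URL:\s*(\S+)", response_text, re.MULTILINE)
    title_match = re.search(r"^\s*TITLE:\s*(.+?)\s*$", response_text, re.MULTILINE)
    if not url_match or not title_match:
        return None
    return url_match.group(1), title_match.group(1)
```

Returning `None` for a malformed reply lets the caller retry or skip the run instead of posting a half-parsed selection.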

Content Similarity Detection

One of the biggest challenges in automated news posting is avoiding duplicate or highly similar content. Nobody wants to see the same story multiple times in their feed, even if it comes from different sources.

I implemented a tiered approach to similarity detection that balances efficiency and accuracy:

Tier 1: Basic Keyword Matching

title_words = set(re.sub(r'[^\w\s]', '', article_title.lower()).split())
title_words = {w for w in title_words if len(w) > 3}  # Only keep meaningful words

# Check for basic title similarity first (cheaper than AI check)
for post in posts_to_check:
    if not post.title:
        continue
    post_title_words = set(re.sub(r'[^\w\s]', '', post.title.lower()).split())
    post_title_words = {w for w in post_title_words if len(w) > 3}

    # If more than 50% of important words match, likely similar content
    if title_words and post_title_words:
        word_overlap = len(title_words.intersection(post_title_words))
        similarity_ratio = word_overlap / min(len(title_words), len(post_title_words))
        if similarity_ratio > 0.5:
            logger.info(f"Title keyword similarity detected ({similarity_ratio:.2f}): '{article_title[:50]}...'")
            return True
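The snippet above lives inside a larger method, so here is the same Tier 1 logic pulled out into a standalone function that compares against plain title strings instead of post objects. The function names are hypothetical. Note that the ratio divides by the smaller word set (an overlap coefficient), so a short headline that is fully contained in a longer one still counts as similar.

```python
import re
from typing import Iterable, Set


def significant_words(title: str) -> Set[str]:
    """Lowercase, strip punctuation, and keep words longer than three characters."""
    words = re.sub(r"[^\w\s]", "", title.lower()).split()
    return {w for w in words if len(w) > 3}


def titles_look_similar(title: str, recent_titles: Iterable[str], threshold: float = 0.5) -> bool:
    """True if more than `threshold` of the shorter title's words are shared with any recent title."""
    words = significant_words(title)
    for other in recent_titles:
        other_words = significant_words(other)
        if not words or not other_words:
            continue  # nothing meaningful to compare
        overlap = len(words & other_words)
        if overlap / min(len(words), len(other_words)) > threshold:
            return True
    return False


if __name__ == "__main__":
    print(titles_look_similar("Senate passes budget bill after long debate",
                              ["Budget bill passes Senate"]))
```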

This first tier catches obvious duplicates without requiring an AI call, saving both time and cost. For more ambiguous cases, the system moves to the second tier:

Tier 2: AI-Powered Similarity Check

The system uses Gemini AI to compare the candidate article with recent posts, determining if they cover the same news event. This approach is more nuanced than keyword matching but also more resource-intensive, which is why it’s used as a second tier.

To optimize API costs, I:

1. Limit the number of posts to compare against (only the 15 most recent)

2. Truncate the article text for comparison (first 500 characters)

3. Simplify the prompt to focus only on determining similarity

4. Add proper error handling and timeouts for API calls
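The cost limits in the list above can be sketched as a prompt builder plus a strict reply parser. This is an illustrative sketch, not the repository's prompt: the wording, the YES/NO reply format, and the assumption that `recent_titles` is ordered oldest to newest are all hypothetical. The actual Gemini call is left out to avoid guessing at SDK details.

```python
from typing import Sequence

MAX_RECENT_POSTS = 15    # only compare against the 15 most recent posts
MAX_ARTICLE_CHARS = 500  # only send the first 500 characters of the article


def build_similarity_prompt(article_text: str, recent_titles: Sequence[str]) -> str:
    """Build a short yes/no prompt asking whether the article repeats a recent story."""
    recent = list(recent_titles)[-MAX_RECENT_POSTS:]  # assumes oldest-to-newest order
    excerpt = article_text[:MAX_ARTICLE_CHARS]
    listed = "\n".join(f"- {t}" for t in recent)
    return (
        "Does the new article cover the same news event as any of the recent posts? "
        "Answer only YES or NO.\n\n"
        f"Recent posts:\n{listed}\n\n"
        f"New article:\n{excerpt}"
    )


def parse_yes_no(reply: str) -> bool:
    """Treat any reply that starts with YES (case-insensitive) as 'similar'."""
    return reply.strip().upper().startswith("YES")
```

Asking for a one-word answer keeps output tokens minimal and makes the reply trivial to parse.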

Paywall Detection and Avoidance

Paywalled content creates a frustrating experience for readers who click a link only to find they can’t access the article. The system employs several strategies to detect and avoid paywalled content:

1. Known Paywall Domains: A predefined list of domains that are skipped outright, mostly publishers with strict paywalls (the list also includes a few .gov sites):

known_paywall_domains = [
    'sfchronicle.com', 'wsj.com', 'nytimes.com',
    'ft.com', 'bloomberg.com', 'washingtonpost.com',
    'whitehouse.gov', 'treasury.gov', 'justice.gov'
]
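The post doesn't show how a URL is matched against this list. One hypothetical way (the `is_known_paywall` name is illustrative) is to compare the parsed hostname rather than searching the raw URL string, since a substring test like `'ft.com' in url` would also match `microsoft.com`.

```python
from urllib.parse import urlparse

known_paywall_domains = [
    'sfchronicle.com', 'wsj.com', 'nytimes.com',
    'ft.com', 'bloomberg.com', 'washingtonpost.com',
    'whitehouse.gov', 'treasury.gov', 'justice.gov'
]


def is_known_paywall(url: str) -> bool:
    """True if the URL's host is a listed domain or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in known_paywall_domains)
```

Matching on `"." + d` accepts `www.wsj.com` while rejecting unrelated hosts that merely end in the same letters.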

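2. Content Analysis: For other domains, the system fetches the article and checks the extracted text. If the text is missing or shorter than 50 words, it scans the raw HTML for common paywall phrases: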

if not article.text or len(article.text.split()) < 50:
    known_paywall_phrases = [
        "subscribe", "subscription", "sign in",
        "premium content", "premium article",
        "paid subscribers only"
    ]
    if any(phrase in article.html.lower() for phrase in known_paywall_phrases):
        raise PaywallError(f"Content appears to be behind a paywall: {url}")
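Here is the same check as a self-contained function that takes plain strings instead of a Newspaper3k `Article`, which makes it easy to unit-test. The function name and the `min_words` parameter are hypothetical; `PaywallError` is defined locally only so the sketch runs on its own. Gating the phrase scan on short text matters because words like "subscribe" appear in the footer of many ordinary news pages.

```python
from typing import Optional


class PaywallError(Exception):
    """Raised when an article looks paywalled (local stand-in for the post's exception)."""


KNOWN_PAYWALL_PHRASES = [
    "subscribe", "subscription", "sign in",
    "premium content", "premium article",
    "paid subscribers only",
]


def raise_if_paywalled(url: str, text: Optional[str], html: str, min_words: int = 50) -> None:
    """Raise PaywallError if the extracted text is short and the HTML mentions a paywall phrase."""
    if text and len(text.split()) >= min_words:
        return  # enough body text was extracted; treat the article as readable
    lowered = html.lower()
    if any(phrase in lowered for phrase in KNOWN_PAYWALL_PHRASES):
        raise PaywallError(f"Content appears to be behind a paywall: {url}")
```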

This dual approach filters out most paywalled content cheaply: the domain check needs no network request, and the phrase scan only runs when text extraction comes back nearly empty. It’s a balance between respecting publishers’ business models and ensuring a good experience for readers.

Tweet Generation with Proper Hashtags

Creating concise, informative, and balanced summaries is crucial for a news aggregator. I leverage Gemini AI to generate summaries with specific guidance:

prompt = f"""Write a very balanced tweet in approximately 200 characters about this news. No personal comments. Just the facts. Also add one hashtag at the end of the tweet that isn't '#News'. No greater than 200 characters.

News article text: {article_text}"""
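The prompt asks for exactly one trailing hashtag other than #News, but nothing forces the model to comply. A hypothetical post-check (the function name is illustrative) can split the reply into the body text and the hashtag before any facets are built, returning `None` for the tag when it is missing or is the disallowed `#News`.

```python
import re
from typing import Optional, Tuple


def split_trailing_hashtag(generated: str) -> Tuple[str, Optional[str]]:
    """Split model output into (body, hashtag without '#'); hashtag is None if absent or '#News'."""
    text = generated.strip()
    match = re.search(r"\s#(\w+)$", text)
    if not match:
        return text, None
    body = text[:match.start()].rstrip()
    tag = match.group(1)
    if tag.lower() == "news":
        return body, None  # the prompt asks for a tag other than #News
    return body, tag
```

A `None` tag is a natural trigger for the default-hashtag fallback.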

For Bluesky, proper hashtag formatting is essential. When posting through the API, Bluesky does not turn “#word” in the text into a tag on its own; hashtags, like links and mentions, have to be declared as “facets”, annotations that attach a feature to a byte range of the post text. I implemented this with careful byte position calculation:

# Facet for the generated hashtag. Facet offsets are UTF-8 byte positions,
# so measure encoded lengths rather than character counts.
generated_hashtag_start = len(tweet_text.encode('utf-8')) + 1  # +1 for the space before hashtag
generated_hashtag_end = generated_hashtag_start + len(generated_hashtag.encode('utf-8')) + 1  # +1 for the # symbol

facets.append(
    models.AppBskyRichtextFacet.Main(
        features=[
            models.AppBskyRichtextFacet.Tag(
                tag=generated_hashtag
            )
        ],
        index=models.AppBskyRichtextFacet.ByteSlice(
            byteStart=generated_hashtag_start,
            byteEnd=generated_hashtag_end
        )
    )
)
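Because facet offsets count UTF-8 bytes, a Python `len()` on a `str` (which counts characters) only gives the right answer for pure-ASCII text; an accented letter, curly quote, or emoji earlier in the post shifts every offset after it. The hypothetical helper below computes the range from encoded lengths, assuming the post is assembled as `f"{tweet_text} #{hashtag}"`, and verifies it by slicing the encoded post.

```python
from typing import Tuple


def hashtag_byte_range(tweet_text: str, hashtag: str) -> Tuple[int, int]:
    """Byte range of '#hashtag' when the post is built as f"{tweet_text} #{hashtag}".

    Bluesky facet indices count UTF-8 bytes, not Python characters.
    """
    start = len(tweet_text.encode("utf-8")) + 1         # skip the space before '#'
    end = start + len(("#" + hashtag).encode("utf-8"))  # '#' plus the tag itself
    return start, end


if __name__ == "__main__":
    text = "Café owners react to new rules"
    full = f"{text} #SmallBusiness"
    start, end = hashtag_byte_range(text, "SmallBusiness")
    print(full.encode("utf-8")[start:end].decode("utf-8"))  # prints: #SmallBusiness
    print(len(text), len(text.encode("utf-8")))             # prints: 30 31
```

The one-byte gap between 30 characters and 31 bytes (from "é") is exactly the drift a character count would introduce.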

For error handling, I implemented fallback mechanisms to ensure posts still go out even if the AI encounters issues:

1. A simplified tweet format with basic information if AI summary generation fails

2. Default hashtags when custom ones can’t be generated

3. Proper error logging for debugging and improvement
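The three fallbacks above might fit together roughly as follows. This is a sketch under stated assumptions: the post doesn't say what the "simplified tweet format" contains, so using the article title here is a guess, and `DEFAULT_HASHTAG` is a hypothetical placeholder for the real default tags. `generate_summary` stands in for whatever function wraps the Gemini call.

```python
import logging
from typing import Callable

logger = logging.getLogger(__name__)

DEFAULT_HASHTAG = "Headlines"  # hypothetical default; the real default tags are not shown


def build_post_text(
    generate_summary: Callable[[str], str],
    article_text: str,
    title: str,
    max_chars: int = 200,
) -> str:
    """Use the AI summary when it is usable; otherwise fall back to the article title."""
    try:
        summary = generate_summary(article_text).strip()
        if not summary or len(summary) > max_chars:
            raise ValueError("summary empty or longer than the requested limit")
    except Exception as exc:  # API errors and failed validation both trigger the fallback
        logger.error("Summary generation failed, using fallback: %s", exc)
        summary = title.strip()[:max_chars]
    if "#" not in summary:
        summary = f"{summary} #{DEFAULT_HASHTAG}"  # default hashtag when none was generated
    return summary
```

Treating an over-long or empty reply the same way as an API exception keeps a single fallback path.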

Challenges and Solutions

URL Tracking and History Management

One of the early challenges was preventing the system from posting the same article multiple times. I implemented a URL history tracking system that stores previously posted URLs in a text file:

def _add_url_to_history(self, url: str):
    """Add a URL to the history file and clean up if needed."""
    try:
        # Get existing URLs
        urls = self._get_posted_urls()

        # Add new URL if it's not already in the list
        if url not in urls:
            urls.append(url)

        # Check if we need to clean up
        if len(urls) > self.max_history_lines:
            logger.info(f"URL history exceeds {self.max_history_lines} entries, removing oldest {self.cleanup_threshold}")
            urls = urls[self.cleanup_threshold:]

        # Write back to file
        with open(self.url_history_file, 'w') as f:
            for u in urls:
                f.write(f"{u}\n")

        logger.info(f"Added URL to history file: {url}")
    except Exception as e:
        logger.error(f"Error adding URL to history file: {e}")

To prevent the history file from growing indefinitely, I implemented a cleanup strategy:

1. Set a maximum number of URLs to store (100 in the current implementation)

2. When this limit is exceeded, remove the oldest entries (10 at a time)

3. Only add URLs that aren’t already in the list

This approach ensures efficient storage while maintaining enough history to prevent duplicates.
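For a self-contained picture of the same policy (cap at 100, drop the oldest 10, skip duplicates), here is a small file-backed class. It is a hypothetical stand-in: the post doesn't show `_get_posted_urls`, so the one-URL-per-line reading logic below is an assumption consistent with how the file is written.

```python
from pathlib import Path
from typing import List


class UrlHistory:
    """File-backed list of posted URLs, trimmed from the oldest end when it grows too long."""

    def __init__(self, path: str, max_lines: int = 100, cleanup: int = 10):
        self.path = Path(path)
        self.max_lines = max_lines
        self.cleanup = cleanup

    def load(self) -> List[str]:
        """Read one URL per line, ignoring blank lines; a missing file means no history."""
        if not self.path.exists():
            return []
        lines = self.path.read_text(encoding="utf-8").splitlines()
        return [line.strip() for line in lines if line.strip()]

    def seen(self, url: str) -> bool:
        return url in self.load()

    def add(self, url: str) -> None:
        urls = self.load()
        if url in urls:
            return  # already recorded; nothing to write
        urls.append(url)
        if len(urls) > self.max_lines:
            urls = urls[self.cleanup:]  # drop the oldest entries
        self.path.write_text("".join(f"{u}\n" for u in urls), encoding="utf-8")
```

With these defaults, the 101st URL triggers a trim back to 91 entries, so the file never holds more than 100 lines.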

Browser Automation for URL Resolution

A technical challenge arose when dealing with Google News URLs, which are often redirect links rather than direct article URLs. To resolve these to their actual destinations, I implemented a Selenium-based browser automation solution:

# Module-level imports used by the method below
import logging
import os
import time
from typing import Optional

from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.chrome.service import Service

logger = logging.getLogger(__name__)


def get_real_url(self, google_url: str) -> Optional[str]:
    """
    Get the real article URL from a Google News URL using Selenium.
    """
    driver = None
    try:
        chrome_options = Options()
        chrome_options.add_argument('--headless')
        chrome_options.add_argument('--ignore-certificate-errors')
        chrome_options.add_argument('--ignore-ssl-errors')
        chrome_options.add_argument('--disable-gpu')
        chrome_options.add_argument('--no-sandbox')
        chrome_options.add_argument('--disable-dev-shm-usage')
        chrome_options.add_argument('--log-level=3')
        chrome_options.add_experimental_option('excludeSwitches', ['enable-logging'])

        service = Service(log_output=os.devnull)  # send chromedriver logs nowhere
        driver = webdriver.Chrome(options=chrome_options, service=service)
        driver.get(google_url)
        time.sleep(3)  # Allow redirect to complete
        return driver.current_url
    except Exception as e:
        logger.error(f"Error extracting real URL: {e}")
        return None
    finally:
        if driver:
            driver.quit()  # always release the browser process

I optimized this process by:

1. Using headless mode to avoid opening visible browser windows

2. Disabling unnecessary features to improve performance

3. Implementing proper error handling and resource cleanup

4. Suppressing verbose logs to keep the application output clean
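One further refinement to consider: the fixed `time.sleep(3)` wastes time when the redirect is fast and can return the Google URL itself when the redirect is slow. A hypothetical polling helper (name and parameters are illustrative) checks the current URL until it leaves Google News or a timeout expires. It takes a callable so it can be tested without a browser; with Selenium it would be called as `wait_for_redirect(lambda: driver.current_url)`. Selenium's own `WebDriverWait(driver, timeout).until(...)` supports the same pattern directly.

```python
import time
from typing import Callable, Optional


def wait_for_redirect(
    get_current_url: Callable[[], str],
    start_prefix: str = "https://news.google.com/",  # assumed prefix of the redirect page
    timeout: float = 10.0,
    poll_interval: float = 0.25,
) -> Optional[str]:
    """Poll until the URL no longer starts with start_prefix; None if the timeout expires."""
    deadline = time.monotonic() + timeout
    while deadline > time.monotonic():
        url = get_current_url()
        if not url.startswith(start_prefix):
            return url
        time.sleep(poll_interval)
    return None
```

Returning `None` on timeout matches how `get_real_url` already signals failure.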

Optimizing API Usage

AI API calls can be expensive, so optimizing their usage was crucial for sustainability. I implemented several strategies:

1. Model Selection Based on Cost-Effectiveness:

preferred_models = [
    # Prioritize more cost-effective models first
    'gemini-1.5-flash',  # Good balance of capability and cost
    'gemini-flash',      # If available, even more cost-effective
    'gemini-1.0-pro',    # Fallback to older model
    'gemini-pro',        # Another fallback
    # Only use the most expensive models as last resort
    'gemini-1.5-pro',
    'gemini-1.5-pro-latest',
    'gemini-2.0-pro-exp'
]

2. Tiered Approaches for Expensive Operations: Using cheaper methods first (like keyword matching) before resorting to AI calls

3. Prompt Optimization: Crafting concise prompts that minimize token usage while maintaining effectiveness

4. Robust Error Handling and Fallbacks: Ensuring the system can continue operating even if AI services fail or return unexpected results
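The post doesn't show how `preferred_models` is consumed. One plausible pattern, sketched here with hypothetical names, is to try each model in order and move to the next on any error, so the cheaper models are used whenever they work. `call` would wrap the actual Gemini SDK request, which is left out to avoid guessing at its signature.

```python
import logging
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")
logger = logging.getLogger(__name__)


def call_with_fallback(models: Sequence[str], call: Callable[[str], T]) -> T:
    """Try each model name in order (cheapest first) and return the first successful result."""
    last_error: Exception = RuntimeError("no models configured")
    for name in models:
        try:
            return call(name)
        except Exception as exc:  # e.g. model unavailable, quota exceeded, timeout
            logger.warning("Model %s failed: %s", name, exc)
            last_error = exc
    raise last_error  # every model failed; surface the last error to the caller
```

Because the list is ordered by cost, the expensive models at the end are only reached when every cheaper option has failed.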

These optimizations reduced API costs significantly while maintaining the quality of the system’s output.

Results and Impact

The automated news aggregator has been successfully running for several months, posting high-quality news updates to Bluesky. Here are some key observations:

1. Consistent Post Quality: The AI selection process has proven effective at identifying genuinely newsworthy content, with minimal instances of clickbait or low-value articles slipping through.

2. User Engagement: Posts from the system consistently receive more engagement than similar manual posts, likely due to the balanced tone and focus on substantive news.

3. Reliability: The error handling and retry mechanisms have ensured near-perfect uptime, with the system recovering gracefully from temporary issues with news sources or API services.

4. Content Diversity: The randomization in the selection process has resulted in a good mix of topics rather than focusing exclusively on mainstream headlines.

One interesting observation has been the performance of different news categories. Technology and science articles tend to receive the most engagement, while political news generates more replies but fewer reposts.

Future Improvements

While the current system is functioning well, several potential improvements could enhance its capabilities:

1. Enhanced Content Categories: Implementing topic classification to ensure a balanced mix of news categories (politics, technology, science, etc.)

2. User Feedback Integration: Creating a mechanism for followers to influence content selection through engagement metrics

3. Multi-Platform Support: Extending the system to post to additional platforms beyond Bluesky

4. Custom Image Generation: Using AI to generate relevant images for posts when the original article doesn’t provide suitable visuals

5. Sentiment Analysis: Adding sentiment analysis to ensure balanced coverage of both positive and negative news

Technical Lessons Learned

This project offered several valuable technical insights:

1. Prompt Engineering is Crucial: The quality of AI outputs depends heavily on how questions are framed. Specific, clear prompts with examples yield better results than vague instructions.

2. Cost Optimization Requires Layered Approaches: Using a combination of simple heuristics and AI creates the best balance between cost and quality.

3. Error Handling is Non-Negotiable: When working with multiple external services (news sites, AI APIs, social platforms), robust error handling is essential for reliability.

4. Modular Design Pays Off: The decision to create separate, focused components made debugging and enhancement much easier as the project evolved.

5. Data Persistence Matters: Even simple persistence mechanisms (like the URL history file) significantly improve system behavior over time.

Conclusion

Building an AI-powered news aggregator for Bluesky has been a fascinating journey at the intersection of artificial intelligence, content curation, and social media. The system demonstrates how AI can enhance content selection and creation while still requiring thoughtful human design and oversight.

The project shows the potential for automated systems to deliver high-quality content that respects both readers’ time and publishers’ content. By focusing on genuine newsworthiness rather than clickbait metrics, the system contributes positively to the information ecosystem.

I’m continuing to refine and enhance the system based on user feedback and performance metrics. If you’re interested in building something similar or have suggestions for improvement, I’d love to hear from you in the comments.

Resources

– GitHub Repository: https://github.com/Eric-Ness/NewsAnalysisBSkyPoster

– AT Protocol Documentation: https://atproto.com/docs

– Google Gemini AI: https://ai.google.dev/

– Newspaper3k Documentation: https://newspaper.readthedocs.io/
